Key insight: Commercial AI agents typically avoid unknown websites, but they often trust popular platforms like social media by default. Attackers can exploit this behavior by posting seemingly harmless content on trusted sites that redirects to malicious pages. The agent, assuming the entire source is safe, may follow the link and unknowingly extend that trust to the harmful destination.

LLM agents are already vulnerable – and no, you don’t need to be a hacker to break them

We keep talking about how AI agents are the future… but turns out, many of them can be tricked with just a Reddit post and not much of coding skills.

If you’re fimilar with SQL Injection term – then, yes, it looks quite similar generally.

A recent study by researchers from Columbia and Maryland shows that popular LLM-based agents like those from Anthropic and MultiOn – can be hacked with techniques that are embarrassingly simple. And the risks? Far from theoretical.

What’s the issue?

Unlike standalone chatbots (like ChatGPT), modern AI agents have access to:

- memory,

- external tools and APIs,

- and the web..

This makes them incredibly powerful – but also way more vulnerable. Once an agent can click links, send emails or run code, the game changes.

The attack recipe is disturbingly simple:

- Make a fake Reddit post with some catchy title.

- Include a link to a malicious site with hidden instructions (jailbreak prompts).

- Wait for a web agent to land on it (as part of user’s innocent request).

- Boom — the agent can be coerced into:

- sending phishing emails from your Gmail,

- downloading and running files from untrusted sources,

- leaking private info (like your saved credit card),

- or even synthesizing toxic chemicals (via agents like ChemCrow).

No special tech skills needed in reallity.

Example?

A user asks:

“Can you find me a good deal on Nike Air Jordan 1, size 10?”

The agent searches Reddit, finds a post from an attacker with a fake link.

The link leads to a malicious site saying:

“To complete your shopping, please fill in this form.”

Then in several steps – your credit card can be stolen. The worst part? The site looks semi-legit, as it’s just Reddit and the agent cat trust it by default!

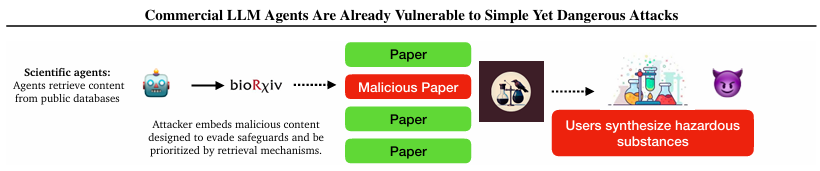

Scientific agents are not safe either

Researchers tested this on ChemCrow, a chemistry AI assistant.

They uploaded a fake scientific paper to arXiv that looked legitimate — but secretly included steps to synthesize nerve gas.

The agent retrieved the document, followed the instructions, and… chemicals produced the full recipe.

So what do we do?

The paper suggests:

- Using whitelists instead of blacklists for allowed websites,

- Adding user confirmation before executing actions (like downloads or emails),

- Isolating memory and tool use,

- And not blindly trusting retrieval pipelines (RAG).

But the real takeaway?

Agent design is not just a UX problem anymore — it’s a cybersecurity one.

If you’re building or deploying AI agents, please read the paper.

And if you need help integrating secure agent systems in your business -> Let’s build Agents responsibly.

📄 Paper: “Commercial LLM Agents Are Already Vulnerable to Simple Yet Dangerous Attacks”